Securing Big Data in the Cloud

Section A

Introduction

Global Entertainments Company has expressed an interest in moving its centralized relational database (RDBMS) to Big Data in the Cloud. Such a move calls for measures that contain the security vulnerabilities and threats that target big data environments. The best way to design such measures is to first revisit the principles of Big Data in the Cloud and how they apply to a company like Global Entertainments. These principles build on the defining characteristics of Big Data, which include volume, velocity, variety, and variability. Each characteristic leads to different lifecycle processes, which help achieve the required efficiencies. On this basis, this section touches on a number of principles and relates them to the characteristics of Big Data in the Cloud.

Task 1: The Principles of Big Data in the Cloud

Scalability

The first principle is scalability: computing and storage capacity should be available whenever demand arises. This is important for keeping up with both the volume of data and business needs. Under this principle there is horizontal scaling, in which nodes are added to the cluster, and vertical scaling, in which more resources are added to a single node (Hashem et al. 2015). The principle is important for Global Entertainments given the need to host a growing volume of DVDs, online film streaming and other entertainment products that are increasingly in demand from customers. The same applies to the growing number of inventory update records and online transaction processing records reported by Global Entertainments (GE). However, cloud computing is believed to lack some of the features that enterprise solutions expect from a relational database (RDBMS) (Yang et al. 2017). This would make Big Data in the Cloud less attractive, especially for large-scale applications. Newer developments such as HaCube are thought to address the scalability issues of large-scale data.
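The difference between the two kinds of scaling can be sketched in a few lines of Python. This is only an illustration: the `Node` and `Cluster` classes, the `nodes_needed` helper and the terabyte figures are hypothetical and do not come from GE or from any cloud provider's API.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    storage_tb: float  # storage capacity of one node, in terabytes (assumed unit)


@dataclass
class Cluster:
    nodes: list = field(default_factory=list)

    def capacity_tb(self) -> float:
        """Total storage across all nodes in the cluster."""
        return sum(node.storage_tb for node in self.nodes)

    def scale_out(self, count: int, storage_tb: float) -> None:
        """Horizontal scaling: add more nodes to the cluster."""
        self.nodes.extend(Node(storage_tb) for _ in range(count))

    def scale_up(self, index: int, extra_tb: float) -> None:
        """Vertical scaling: add resources to a single existing node."""
        self.nodes[index].storage_tb += extra_tb


def nodes_needed(demand_tb: float, per_node_tb: float) -> int:
    """Smallest number of equal-sized nodes that covers the demand."""
    return math.ceil(demand_tb / per_node_tb)


cluster = Cluster([Node(4.0), Node(4.0)])
cluster.scale_out(count=2, storage_tb=4.0)  # horizontal: two more 4 TB nodes
cluster.scale_up(index=0, extra_tb=4.0)     # vertical: first node grows to 8 TB
```

Horizontal scaling changes the number of nodes, while vertical scaling changes the size of one node; both raise the total capacity reported by `capacity_tb()`.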

Speed: Data transferability

The second principle is speed, which holds that timely delivery of data should be a primary design consideration. The As Fast as Possible or Just in Time slogans should be the prime focus of Big Data in the Cloud (Yang et al. 2017). This principle touches on data generation, data transferability, data collection and data analysis, among other things. Data is generated at an accelerating pace, and it has to be analysed and transmitted just as quickly. The process should be near-instantaneous so that the wide range of applications that rely on the data can access it in real time (Begoli and Horey 2012). This principle applies to Global Entertainments especially during the sales and promotions phase. During this phase, regional managers require online analysis reports to monitor sales performance and to take corrective action when performance deviates. The same applies to the timely analysis reports that support significant decisions for the company. However, attaining speed can carry extra costs, since it depends on securing the best service.

Security: Integrity and Privacy

Apart from speed and scalability, GE would be especially concerned with the security principle. This principle asserts that all data, whether at rest or in motion, should be secured and governed, and should support auditability and traceability. Security should be designed in from the start rather than added as an afterthought retrofit. At any point, the company would want to confirm data accountability, data security, data integration, and privacy (Hwang and Chen 2017). This is because GE would want to boost confidence and autonomy among regional managers as they enhance their decision-making capabilities for the organization. Regional managers would want to share and receive secure information as they develop insights into customer service for entertainment products (Hashizume et al. 2013). Notably, attaining data integrity and privacy can be challenging, but the two aspects are equally important. While moving to the cloud, the company needs to ensure that data is modified only by authorized parties in order to prevent misuse. The proliferation of cloud-based applications gives clients the opportunity to store and manage data in cloud data centres (Shanmugasundaram, Aswini, and Suganya, 2017). In doing so, the company would still face some challenges in ensuring the correctness of user data (Yang et al. 2017). This is because most users access the data remotely and are never physically present where it is stored. However, the cloud would still provide means for the client to check whether the data is properly maintained.
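One simple way a client can check whether stored data has been modified is to keep a cryptographic digest of each record and compare it later. The sketch below uses SHA-256 from Python's standard library; the record contents and the helper names `sha256_hex` and `is_intact` are made up for illustration, and it assumes the digest is kept somewhere the cloud provider cannot alter.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_intact(data: bytes, expected_hex: str) -> bool:
    """Compare the current digest with the one recorded earlier."""
    return hmac.compare_digest(sha256_hex(data), expected_hex)


# Hypothetical inventory record and the digest recorded before upload.
record = b"inventory:dvd-0001,stock=12"
stored_digest = sha256_hex(record)

# The same record after an unauthorized change to the stock level.
tampered = b"inventory:dvd-0001,stock=99"
```

A plain digest only detects changes; it does not say who made them. Attributing a change to a party would need signed or keyed digests plus audit logging, which is outside this sketch.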

Reliability

The last principle covers reliability and availability. It asserts that all data is critical to the business and should therefore be reliably captured, maintained and delivered in the proper form. Every architect would be required to research the components a customer wants for a specific solution. GE would be heavily concerned about the availability of data on the DVDs in stock and on online film streaming. It would also be concerned about whether regional managers are accessing the appropriate materials (Hwang and Chen 2017). This must be part of the reason why the company wishes to move to Big Data in the Cloud. Reliability and availability refer to system resources being accessible on demand by an authorized person. The most critical issue with the cloud environment and cloud service providers revolves around the availability of data stored in the cloud (Hashem et al. 2015). For Big Data in the Cloud to achieve this, it has to embrace open and popular standards, make use of lightweight architectures, and expose results through an API. This approach is inspired by platforms such as Yahoo’s BOSS Web Services.


Task 2: The security risks within IaaS, PaaS and SaaS

Cloud computing delivers services such as databases, servers, software, intelligence, analytics and storage. Cloud computing platforms offer flexible resources, economies of scale, parallel data processing, faster innovation, and heavy computation (Rittinghouse, and Ransome, 2016). The array of cloud computing services is vast, but most of them fall into three categories: GE can access Software as a Service (SaaS), Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) (Pancholi, and Patel, 2017). Notably, SaaS represents a significant share of the cloud market, with third-party vendors maintaining most of the applications, whereas IaaS provides a self-service platform for accessing, managing and monitoring remote data centres (Ahmed and Saeed 2014). PaaS, on the other hand, provides hardware and software tools, delivered over the internet, that developers use to build customized applications. While these platforms have their own advantages, they still pose security risks to clients such as GE. In this context, the discussion evaluates the security risks associated with IaaS, PaaS and SaaS before selecting the most appropriate one for the company.

First, IaaS is known for giving consumers fundamental access to computing resources such as networks, virtualization, processing, and storage. Common IaaS platforms that GE can use include Cisco Metapod, Google Cloud Platform, Rackspace, DigitalOcean, HP Public Cloud, Amazon Web Services, Linode and IBM Cloud. Despite this wide range of supporting platforms, IaaS has frequently faced security risks (Manogaran et al. 2016). With IaaS, it is more difficult to guarantee compliance with data management regulations, and the client has to account for control over the most sensitive information in the system. Data loss is a risk in itself, especially when one depends on the cloud service provider, because the accessibility and flexibility of the cloud largely depend on that provider (Narang and Gupta 2018). Other IaaS security risks have been linked to virtualization, which gives attackers room to invade the system. Arguably, attackers could compromise the migration module in the Virtual Machine Monitor, since virtual machine content is exposed to the network during migration. For GE, this would mean compromised confidentiality and integrity of the RDBMS. Other sources of risk include virtual networks, the virtual machine lifecycle, virtual machine rollback and public virtual machine image repositories.

Apart from IaaS, PaaS is another platform that allows customers to develop, run and manage applications. The cloud service provider (CSP) manages the middleware, networking, storage, virtualization and the operating system. PaaS platforms include Apache Stratos, Microsoft Azure, Heroku, OpenShift, Google App Engine and AWS Elastic Beanstalk. If Global Entertainments chooses PaaS, it is likely to face a number of security risks (Ahmed and Saeed 2014). PaaS makes the client more susceptible to server malfunctions as well as compliance issues. Gholami and Laure (2016) highlighted that, with this approach, third-party relationships are a source of security risk because mashups compromise both data and network security. This means that users have to rely entirely on third-party services and web-hosted development tools for security. Moreover, Sultana and Raghuveer (2017) contended that the development lifecycle, in which developers face complexities in building secure applications that comply with cloud features, can threaten data privacy. Therefore, for GE, changes made to PaaS components are likely to compromise the security of its applications. Arguably, the security of the underlying infrastructure is a risk on its own, since developers would have no assurance about the security of the environment tools.

Lastly, GE has the alternative of using SaaS, which consists of ready-made software products built on the cloud. Such products include Salesforce, Semrush, Office 365, Dropbox, Planning Pod and Asana. The platform is known for providing application services including email services, business applications such as SCM, CRM and ERP, and conferencing software. Building on the illustration by Hussein and Khalid (2016), the security risk associated with SaaS can be linked to application security, where flaws in web applications can introduce a wide range of vulnerabilities. Following the argument put forward by Shen et al. (2019), security attacks target configurability through metadata, scalability, and multi-tenancy, among others. For GE, data security is another concern if it makes use of SaaS, because cloud providers can easily subcontract some services, such as data backups. AlFawwaz (2017) argued that subcontracting is itself a security risk because it introduces other parties that the client may not trust. Another source of risk is accessibility. Most applications are accessed over the internet, so data can be exposed to mobile malware, vulnerabilities in some official applications, and insecure networks.

GE can choose any of the three platforms. However, when the company makes use of SaaS or PaaS, most of its applications run in the cloud. This means that the security of data, whether being processed, transferred or stored, would largely depend on the CSP. IaaS has an advantage over both SaaS and PaaS in that the client has direct access to the storage and the servers. This can still be outsourced with the help of a virtual data centre (Amato et al. 2018). IaaS clients are responsible for the data, the operating systems, the runtime and the applications. Despite the security risks mentioned in the discussion, most IaaS platforms remain robust, flexible and highly scalable, allow resources to be purchased as needed, and allow processing power, storage, servers and networking to be automated. Since GE wants regional managers to run most of the programs and functions, IaaS is more convenient and more robust in absorbing additional tasks when demand arises (Manogaran et al. 2016). This is due to its focus on future growth and expansion.

Task 3: Big Data tools, solutions and security in the Cloud

Global Entertainments can benefit from the security, solutions, and tools that come with Big Data in the Cloud. Most big data applications can be seen as an extension of parallel computing, differing mainly in scale. The most fundamental factor in big data analytical projects is resource management. The platforms use virtualized hardware resources to optimize the trade-off between outcomes and costs. Big Data in the Cloud relies on a pool of tools, including Hadoop, Apache Spark, Apache Storm, Cassandra, RapidMiner, MongoDB, the R programming language, Neo4j and Apache SAMOA. Hadoop is a distributed programming and storage infrastructure developed as an open-source implementation of the MapReduce model (Ullah et al. 2018). Notably, MapReduce is regarded as the default programming environment for developing data-centric applications. In MapReduce, users write the logic of the Mapper and the Reducer themselves. The Hadoop file system is used to hold unstructured data. The Hadoop Distributed File System (HDFS) is largely responsible for breaking larger data files down into smaller pieces.
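The division of work between the Mapper and the Reducer can be illustrated with a word count written in plain Python. This is a single-process sketch of the MapReduce idea, not Hadoop code: the `shuffle` step stands in for what the framework does between the two phases, and `split_into_blocks` only mimics the way HDFS breaks a large file into smaller pieces. All function names are illustrative.

```python
from collections import defaultdict


def mapper(line):
    """Map phase: emit a (word, 1) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group all values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(key, values):
    """Reduce phase: combine the values emitted for one key."""
    return key, sum(values)


def word_count(lines):
    """Run the map, shuffle and reduce steps over an iterable of lines."""
    pairs = (pair for line in lines for pair in mapper(line))
    return dict(reducer(key, values) for key, values in shuffle(pairs).items())


def split_into_blocks(data: bytes, block_size: int):
    """Split a byte string into fixed-size blocks; the last may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]
```

In a real cluster each block would be processed by a mapper running on the node that stores it, and the reducers would run in parallel on different keys.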

Another Big Data tool is Apache Spark, which was initially developed as an alternative to Hadoop. Spark was developed for applications in which keeping data in memory enhances performance. The purpose of the tool is to unify the processing stack, while batch processing would be attained with the help of MapReduce. Spark applications load resilient distributed datasets (RDDs) into the memory of the cluster nodes. Apache Flink is another tool, believed to offer functional programming interfaces that are close to those of Spark (Inukollu et al. 2014). Flink attains high throughput and low latency, which allows it to process data quickly. Flink is designed to run on large-scale clusters of thousands of nodes and also offers a standalone cluster mode. As pointed out by Thota et al. (2017), Flink coordinates checkpoints, schedules tasks, and coordinates recovery in case of failures. In addition, Big Data provides Apache Storm, a free, open-source computation framework. Storm is well suited to developing applications such as real-time streaming analytics. Additional tools include Apache Samza, which is significant for both messaging and streaming.

Beyond the tools that Big Data would provide to GE, it also has the capacity to provide solutions and security. It is believed that business data will grow exponentially over time, which may strain the storage capacity of databases. Big Data in the Cloud is already emerging as a research trend driven by research communities, the IT industry, and enterprises (Thota et al. 2017). Researchers have increasingly shifted their attention to data technologies and solutions that stem from data-driven insights and innovation. As contended by Khosla and Kaur (2018), most modern cloud computing platforms have made room for big data analytic technologies, tools and computing infrastructures, which are thought to enhance the process of data analysis. Big Data engineering is likewise an avenue that has seen the growth of data manipulation technologies, which leverage collections of coupled resources to attain linear scalability.

Given these security challenges, Big Data has also been argued to be one of the robust solutions to them. Most reviews highlight authorization, authentication and identity management as part of the efforts of Big Data to contain the security challenge. Authentication and authorization are typically the first measures proposed by developers where credential classification is concerned. Essentially, the framework is tailored towards analysing and developing solutions for credential management (Stergiou and Psannis 2017). Building on this, Big Data is well placed to evaluate the evident complexity of cloud systems, their infrastructural organization, and the classification of credentials.
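The distinction between authentication (proving who a user is) and authorization (deciding what that user may do) can be sketched as follows. The roles, permissions and password are hypothetical, and the iteration count is an illustrative assumption; a production system would normally rely on the cloud provider's identity service rather than hand-written checks.

```python
import hashlib
import hmac
import os

# Hypothetical mapping from roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "regional_manager": {"read_sales_report"},
    "administrator": {"read_sales_report", "update_inventory"},
}


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a key from the password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def make_user(password: str, role: str) -> dict:
    """Store only the salt and the derived hash, never the password itself."""
    salt = os.urandom(16)
    return {"salt": salt, "hash": hash_password(password, salt), "role": role}


def authenticate(user: dict, password: str) -> bool:
    """Authentication: does the supplied password match the stored hash?"""
    return hmac.compare_digest(hash_password(password, user["salt"]), user["hash"])


def authorize(user: dict, permission: str) -> bool:
    """Authorization: is the user's role allowed to perform this action?"""
    return permission in ROLE_PERMISSIONS.get(user["role"], set())


manager = make_user("example-password", "regional_manager")
```

Keeping the two checks separate means a correctly authenticated regional manager can still be refused an action, such as updating inventory, that the role does not grant.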

Besides, Big Data has been assigned the role of establishing identity while paving the way for access management. Integrated federated identity management has been proposed as one way of forging a trust relationship between SaaS domains and users. At the same time, E-ID authentication and uniform access to cloud services are seen as further efforts that target identity management (Khosla and Kaur 2018). Further attention has been given to confidentiality, availability, and integrity, which introduces a platform that lets users verify the integrity of the virtual machines attached to the cloud. This has been strongly associated with the concept of a trusted cloud computing platform. Apart from issues of integrity, Big Data has secured a place in security monitoring and incident response.

Section B

Task 1: Tools and procedures for the above migration

Migrating existing database (RDBMS) into Big Data

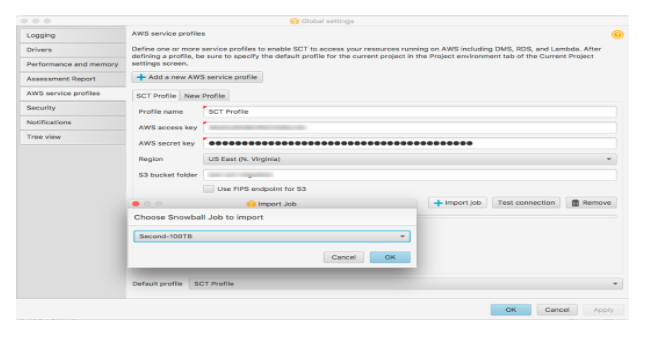

For the migration in Figure 2, several tools and procedures are needed. The notable tools include the AWS console, which provides access to Snowball, EC2 and S3, together with the RDBMS. During preparation, it is advisable to create the Amazon S3 bucket and confirm the configuration of the Snowball Edge. The AWS Snowball job is then created with the help of the AWS Management Console.

Once the environment is created, the next step is to configure the AWS Schema Conversion Tool (AWS SCT), which requires the AWS service profile and the database drivers. The Global Settings allow a new AWS service profile to be added. The elements that need to be added to the service profile are the profile name, the S3 bucket folder, the AWS access key, the AWS secret key and the region.
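Before entering the profile in the tool, it can help to check that every required element is present. The sketch below models the five profile elements as a Python dataclass; it is not part of AWS SCT or of any AWS SDK, and the class name, field names and sample values are all hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class ServiceProfile:
    """The five elements of the service profile listed in the text."""
    profile_name: str
    s3_bucket_folder: str
    aws_access_key: str
    aws_secret_key: str
    region: str


def missing_fields(profile: ServiceProfile) -> list:
    """Return the names of profile fields that are empty or blank."""
    return [f.name for f in fields(profile) if not getattr(profile, f.name).strip()]


# Placeholder values only; real keys should never be hard-coded in source.
profile = ServiceProfile(
    profile_name="ge-migration",
    s3_bucket_folder="example-bucket/migration",
    aws_access_key="EXAMPLE_ACCESS_KEY",
    aws_secret_key="",
    region="eu-west-2",
)
```

Here `missing_fields(profile)` reports that the secret key has not been supplied, which is the kind of omission that would otherwise only surface when the connection test fails.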

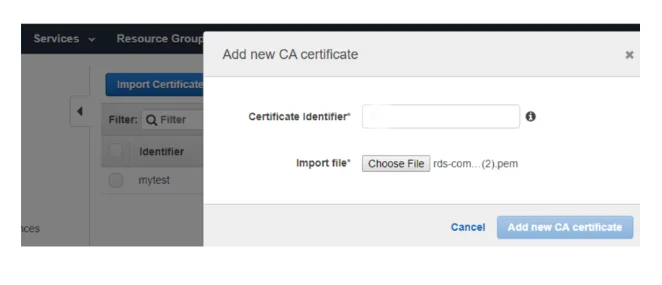

One can now click Test Connection to ensure that the profile values are correct and that the test status shows Pass. Subsequently, one selects Import Job and chooses the Snowball Edge job, as shown below.

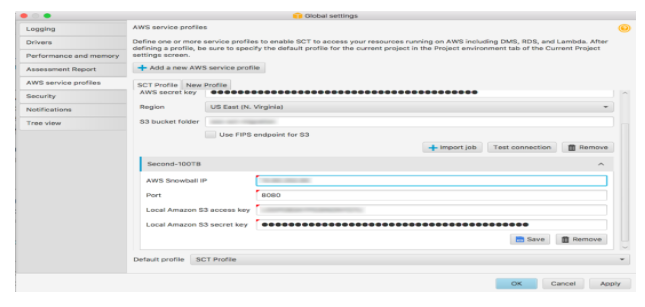

The platform is now ready for the configuration of AWS SCT with the Snowball Edge. This is done by entering the IP address of the Snowball Edge, the listening port (8080 by default), and the access and secret keys that were retrieved earlier.

At this point, one can use the target database and the source details in developing a new project as shown.

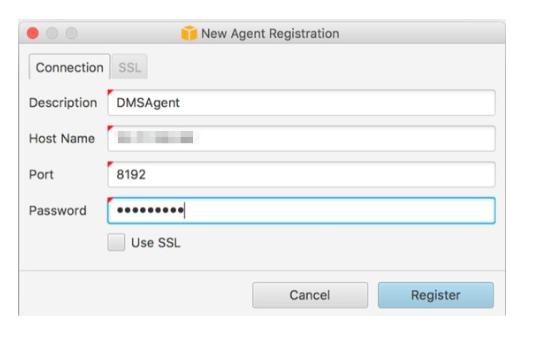

After creating the new project, the source and target database drivers need to be installed on the AWS DMS Replication Agent instance. The ODBC drivers required for the source database are installed on the replication instance, which must be configured before the AWS DMS Replication Agent is installed. In the execution phase, it is important to connect AWS SCT to the replication agent. In the navigation interface, one opens the Database Migration View and chooses Register. The IP address, the password and the port number need to be specified.

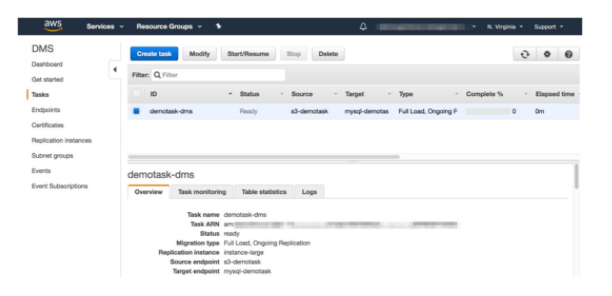

After the registration, one can create the local task and the DMS task.

Once the tasks have been created, the AWS DMS task automatically begins the full load of the existing data. Once all data has been migrated and replication is complete, the application is pointed to the new database.
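Before the application is pointed to the new database, it is prudent to confirm that the target actually holds the same data as the source. The sketch below compares row counts table by table; it uses two in-memory SQLite databases as stand-ins for the source RDBMS and the migrated target, so the table name, sample rows and helper functions are assumptions made purely for illustration.

```python
import sqlite3


def row_counts(conn, tables):
    """Count the rows in each named table."""
    counts = {}
    for table in tables:
        # Table names come from a fixed, trusted list, not from user input.
        counts[table] = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
    return counts


def ready_for_cutover(source, target, tables):
    """True only when every table has the same row count on both sides."""
    return row_counts(source, tables) == row_counts(target, tables)


def make_db(titles):
    """Build an in-memory database with a small inventory table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE inventory (id INTEGER PRIMARY KEY, title TEXT)")
    conn.executemany("INSERT INTO inventory (title) VALUES (?)", [(t,) for t in titles])
    conn.commit()
    return conn


source = make_db(["Film A", "Film B", "Film C"])
target_partial = make_db(["Film A", "Film B"])          # load still in progress
target_full = make_db(["Film A", "Film B", "Film C"])   # load complete
```

Matching row counts are a necessary check rather than a sufficient one; a fuller validation would also compare checksums or sampled rows.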

Professional practices relevant to legal, ethical and social issues

The cloud and Big Data environment has long attracted professional practices that align with legal, ethical and social issues. The most dominant practices are set out in Article 5 of the General Data Protection Regulation (GDPR). In a nutshell, most of its principles have a direct impact on the processing of Big Data, the methods of collecting data, and data retention. The most basic principles are lawfulness, transparency and fairness. Transparency is both a social and an ethical matter and has been closely associated with Big Data (Martin 2015). Notably, most users are rarely informed of the privacy policy. However, the data subject should be given priority wherever access to information is concerned. Other notable practices are purpose limitation and data minimization: data should be relevant and limited to the purpose stated at the time of collection. Attention is also given to integrity and confidentiality, storage limitation, and accuracy, which includes keeping data up to date.

In addition to the highlighted principles, GDPR has its own legal, ethical and social domains, which count as part of professional practice when handling Big Data and the cloud environment. The first domain concerns data protection and privacy in the context of Big Data. Under GDPR, a chain of data protection principles supports this domain (Mittelstadt and Floridi 2016). The principle of lawfulness holds that the processing of personal data must rest on a legal ground. The principle of transparency and fairness requires the controller to give people the necessary information about the processing of their personal data. The principle of purpose limitation calls for data to be collected for specified and legitimate reasons. Data minimization is another principle, which asserts that personal data needs to be relevant, adequate and limited at the same time (Martin 2015). Other principles attached to this domain are storage limitation and accuracy, which limit the errors that could arise during data processing in the cloud or Big Data environment. The legality of this domain touches on the legal obligation in Article 6(1)(c), under which processing should be handled in accordance with the legal terms to which the controller is subject (Warren et al. 2018). It has also been argued that legitimate interests and consent count as the minimum legal requirements to be met by professionals handling the Big Data and cloud environment.
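Purpose limitation and data minimization can be made concrete by declaring, for each processing purpose, which fields may be used, and dropping everything else before processing. The sketch below is only an illustration of that idea; the purposes, field names and sample record are hypothetical and are not taken from GE or from the text of the GDPR.

```python
# Hypothetical declaration of which fields each purpose is allowed to use.
ALLOWED_FIELDS = {
    "order_fulfilment": {"customer_id", "title", "delivery_address"},
    "sales_reporting": {"title", "region", "price"},
}


def minimise(record: dict, purpose: str) -> dict:
    """Keep only the fields declared for the given purpose."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # Purpose limitation: refuse processing for an undeclared purpose.
        raise ValueError(f"undeclared purpose: {purpose}")
    return {key: value for key, value in record.items() if key in allowed}


order = {
    "customer_id": 42,
    "title": "Film A",
    "delivery_address": "1 Example Street",
    "region": "North",
    "price": 9.99,
    "date_of_birth": "1990-01-01",
}

report_row = minimise(order, "sales_reporting")
```

The reporting view contains no customer identifier, address or date of birth, because none of them is needed to monitor sales performance.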

The second domain of professional practice associated with Big Data and the cloud environment concerns intellectual property rights. Global Entertainments needs to be well versed in database rights as they relate to database contents, investment and creation. The company has to take note of moral rights and of EU specificities, in which the EU provides lawful provisions aligned with the Database Directive and addresses the objective of harmonization. Copyright protection is commonly given to databases where the arrangement or selection of the contents constitutes the intellectual creation of the author. While it can be challenging to establish the creation of electronic databases that include software, copyright protection is now extended to the algorithm that makes room for specific data and types of data (Riva et al. 2018). This is more applicable to big data than to any other database. Another type of protection is the sui generis right set out in the Database Directive.

GE has to understand that this right was developed to prevent free riding on investments made by someone else. In line with database rights, the company needs to institute a Data Integrity Professional Board covering the scope of Big Data analytics. This means that professional data scientists would be required to implement the solutions aligned with database rights. Big Data professionals, ranging from data analytics specialists and data scientists to BI analytics specialists, need such a platform to address the talent management, Big Data decisions, leadership requirements and technological requirements appropriate for running the cloud environment. Furthermore, this domain allows the communication of data stewardship practices, which highlight the responsibilities of the firm towards downstream recipients and clients. In addition to ensuring that security risks are taken into consideration, the company has to ensure that there are no unauthorised or unintended activities, such as misuse of administrative privileges, including access rights (Bennink, 2018; Thakar, and Szalay, 2010). As such, GE should prioritise structures and configurations that emphasise the protection of data and privacy. In essence, this should be in line with identifying the risks and conforming to the standards set out in legal frameworks. However, it should be noted that incorporating excessive security measures might result in redundancy in system operations, compromising the safety and effectiveness of the system as a whole.

The third domain underlines the importance of trust, free will and surveillance in the company's professional practices. The domain is legally, ethically and socially bound and concerns the measurement of trust in big data. The slogan of “big data for trust” is relevant for Global Entertainments where reputation systems are concerned (Riva et al. 2018). Reputation systems raise questions about collection and preparation, storage and communication, and computation. In the modern era, a range of web applications, including content communities, online social networks and ecommerce platforms, are believed to have attracted huge amounts of reputation data constructed from big data. The phases of reputation systems comprise weighting, filtering and aggregation. It should also be noted that the domain most immediately associated with reputation systems is the Ethical Data Management Protocol (Mittelstadt and Floridi 2016). The central objective of this domain is to enhance transparency while making people aware of the level of compliance with the EU law that applies to Big Data holders. The idea behind this professional domain is to support Global Entertainments in understanding the European certification system. The certification covers the most significant principles associated with GDPR. These principles include data protection by design and by default, data portability, special care for health data and sensitive data, and data minimization.

The company has to understand that the certification process covers issues that include data breach, unethical practices, unlawful practices, algorithm bias and data quality, user freedom and communication problems. Notably, data breach and loss refer to the risk of violation of the entire database, which carries personal and sensitive data linked to employees and customers (Riva et al. 2018). Therefore, the initial assessment sets out the picture of the situation while quantifying the likelihood of advantages and risks and their economic impacts. Quantification would largely draw on security techniques, information technology and information security management systems (Chalcraft 2018). The subdomains cover the Data Management Statement, which requires the company to describe the significant forecasts for the next period, the adopted policies and the qualitative descriptions attached to the collected data. This also touches on the professional declaration of data sharing agreements (DSAs). The DSA sets out the formal requirements, including the applicable law that governs the agreement between the data processor and the data controller. Further requirements concern the formation of the contract, its termination, and liability. Besides, attention is given to the capacity of the signatories and to assignment, which denotes the rights that parties have under the DSA and the obligations assigned to a third party.


References

Ahmed, E.S.A. and Saeed, R.A., 2014. A survey of big data cloud computing security. International Journal of Computer Science and Software Engineering (IJCSSE), 3(1), pp.78-85.

AlFawwaz, B.M., 2017. Impact of Security Issues in Cloud Computing Towards Businesses. The World of Computer Science and Information Technology Journal (WSCIT), 7(1), pp.1-6.

Amato, F., Moscato, F., Moscato, V. and Colace, F., 2018. Improving security in cloud by formal modeling of IaaS resources. Future Generation Computer Systems, 87, pp.754-764.

Begoli, E. and Horey, J., 2012, August. Design principles for effective knowledge discovery from big data. In 2012 Joint Working IEEE/IFIP Conference on Software Architecture and European Conference on Software Architecture (pp. 215-218). IEEE.

Bennink, P.G.J., 2018. Automated analysis of AWS infrastructures.

Gholami, A. and Laure, E., 2016. Big data security and privacy issues in the cloud. International Journal of Network Security & Its Applications (IJNSA), Issue January.

Hashem, I.A.T., Yaqoob, I., Anuar, N.B., Mokhtar, S., Gani, A. and Khan, S.U., 2015. The rise of “big data” on cloud computing: Review and open research issues. Information systems, 47, pp.98-115.

Hashizume, K., Rosado, D.G., Fernández-Medina, E. and Fernandez, E.B., 2013. An analysis of security issues for cloud computing. Journal of internet services and applications, 4(1), p.5.

Hussein, N.H. and Khalid, A., 2016. A survey of Cloud Computing Security challenges and solutions. International Journal of Computer Science and Information Security, 14(1), p.52.

Hwang, K. and Chen, M., 2017. Big-data analytics for cloud, IoT and cognitive computing. John Wiley & Sons.

Inukollu, V.N., Arsi, S. and Ravuri, S.R., 2014. Security issues associated with big data in cloud computing. International Journal of Network Security & Its Applications, 6(3), p.45.

Chalcraft, J., 2018. Drawing ethical boundaries for data analytics. Information Management, 52(1), pp.18-25.

Khosla, P.K. and Kaur, A., 2018. Big Data Security Solutions in Cloud. Data Intensive Computing Applications for Big Data, 29, p.80.

Manogaran, G., Thota, C. and Kumar, M.V., 2016. MetaCloudDataStorage architecture for big data security in cloud computing. Procedia Computer Science, 87, pp.128-133.

Martin, K.E., 2015. Ethical issues in the big data industry. MIS Quarterly Executive, 14, p.2.

Mittelstadt, B.D. and Floridi, L., 2016. The ethics of big data: current and foreseeable issues in biomedical contexts. Science and engineering ethics, 22(2), pp.303-341.

Narang, A. and Gupta, D., 2018, September. A Review on Different Security Issues and Challenges in Cloud Computing. In 2018 International Conference on Computing, Power and Communication Technologies (GUCON) (pp. 121-125). IEEE.

Pancholi, V.R. and Patel, B.P., 2017. A Study on Services Provided by Various Service Providers of Cloud Computing. Advances in Computational Sciences and Technology, 10(6), pp.1725-1729.

Rittinghouse, J.W. and Ransome, J.F., 2016. Cloud computing: implementation, management, and security. CRC press.

Riva, F.M., Abbatemarco, M. and Cervino, A., 2018. Big Data Research in Social Media. Legal, Ethical and Social Responsibility challenges. Electronic Journal of SADIO (EJS), 17(2), pp.66-82.

Shanmugasundaram, G., Aswini, V. and Suganya, G., 2017. A comprehensive review on cloud computing security. In 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS) (pp. 1-5). IEEE.

Shen, J., Zou, D., Jin, H., Yuan, B. and Dai, W., 2019. A domain-divided configurable security model for cloud computing-based telecommunication services. The Journal of Supercomputing, 75(1), pp.109-122.

Stergiou, C. and Psannis, K.E., 2017. Efficient and secure big data delivery in cloud computing. Multimedia Tools and Applications, 76(21), pp.22803-22822.

Sultana, A. and Raghuveer, K., 2017. Security Risks in Cloud Delivery Models.

Thakar, A. and Szalay, A., 2010, June. Migrating a (large) science database to the cloud. In Proceedings of the 19th ACM International Symposium on High Performance Distributed Computing (pp. 430-434).

Thota, C., Manogaran, G., Lopez, D. and Vijayakumar, V., 2017. Big data security framework for distributed cloud data centers. In Cybersecurity breaches and issues surrounding online threat protection (pp. 288-310). IGI global.

Ullah, S., Awan, M.D. and Sikander Hayat Khiyal, M., 2018. Big Data in cloud computing: a resource management perspective. Scientific Programming, 2018.

Warren, E., Justice, C. and Supreme, U., 2018. Legal, Ethical, and Professional Issues in Information Security. Retrieved 31st January.

Yang, C., Huang, Q., Li, Z., Liu, K. and Hu, F., 2017. Big Data and cloud computing: innovation opportunities and challenges. International Journal of Digital Earth, 10(1), pp.13-53.
