The Future of Telecommunications

The exponential growth in telecommunication application services, together with the demand for high-speed data processing at low latency and low power consumption, creates a dilemma for traditional access networks. Data rate and energy saving have therefore become active research topics, with the aim of devising technological solutions to these issues in access networks.

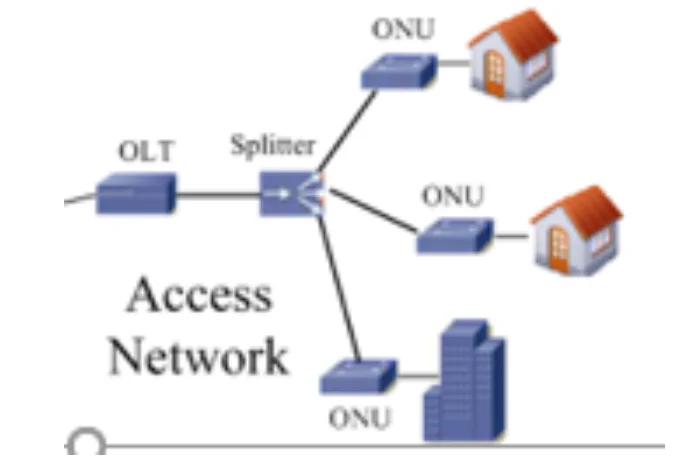

The access network is the “last mile” of a telecommunication network. It interconnects the Central Office (CO) with the end users and makes up the largest part of the telecommunication network. It is considered a major consumer of energy because it involves a massive number of active elements [1]. Optical access networking such as the Passive Optical Network (PON) has recently been considered in data centers as a promising solution both for energy saving and for data processing with low latency.

A PON is more energy efficient than other access networking technologies because it uses passive elements, which saves energy. Moving toward an optical network data center infrastructure is a step toward saving 75% of energy, according to the US Department of Energy vision and report of 2009 [2][3]. PON is a telecommunication technology that uses optical fiber to deliver multiple services to the end user in a point-to-multipoint form. The path from sources to destinations contains only unpowered elements, which reduces power consumption, and unpowered devices such as splitters provide switching, routing, and interconnection [3], [4].

There are three main PON standards. Broadband PON (BPON) is based on ATM (Asynchronous Transfer Mode) as the transmission protocol and supports data rates of 622 Mbps downstream and up to 155 Mbps upstream. Ethernet PON (EPON) relies on Ethernet as the transmission protocol, with transmission speeds up to 1.25 Gbps both downstream and upstream. Gigabit PON (GPON and XG-PON) is based on Ethernet, ATM, and TDM as transmission protocols and offers different transmission speeds: up to 2.5 Gbps downstream and 1.25 Gbps upstream for GPON, and up to 10 Gbps downstream and 2.5 Gbps upstream for XG-PON [5][3].
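Because a PON is point-to-multipoint, the line rates above are shared among the ONUs behind a splitter. The following is a minimal sketch of that arithmetic, not a dimensioning tool: the even split, the ONU count of 32, and the names `PON_RATES_MBPS` and `per_onu_share` are illustrative assumptions, and real systems allocate bandwidth dynamically and lose some capacity to protocol overhead.

```python
# Nominal line rates in Mbps as quoted in the text: (downstream, upstream).
PON_RATES_MBPS = {
    "BPON": (622, 155),
    "EPON": (1250, 1250),
    "GPON": (2500, 1250),
    "XG-PON": (10000, 2500),
}


def per_onu_share(standard: str, num_onus: int) -> tuple[float, float]:
    """Average (downstream, upstream) Mbps per ONU under an even split.

    The even split is an assumption made only for illustration.
    """
    if num_onus <= 0:
        raise ValueError("num_onus must be positive")
    down, up = PON_RATES_MBPS[standard]
    return down / num_onus, up / num_onus


if __name__ == "__main__":
    for name in PON_RATES_MBPS:
        down, up = per_onu_share(name, 32)  # 32 ONUs is a hypothetical split
        print(f"{name}: {down:.1f} / {up:.1f} Mbps per ONU")
```

The per-ONU figure is only an average; it shows why a higher line rate or fewer ONUs per splitter raises the bandwidth available to each user.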

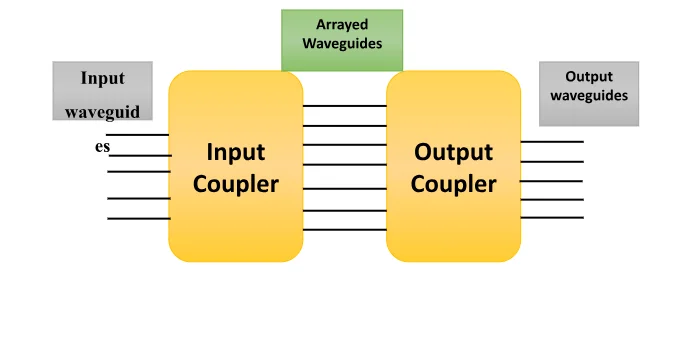

A PON, as shown in Fig 1, consists of an Optical Line Terminal (OLT), Optical Network Units (ONUs) or Optical Network Terminals (ONTs), and the fibers and splitters between them. The OLT is located at the service provider’s central office, initiates and controls the ranging process, and is connected to the splitter through fiber. The ONUs or ONTs sit close to the end users. The fibers and splitters form a point-to-multipoint architecture called the Optical Distribution Network (ODN) [3][4][6]. Typical PON devices include the Arrayed Waveguide Grating Router (AWGR), the coupler/splitter, and the Fiber Bragg Grating [3][7].

Arrayed Waveguide Grating

An AWG consists of two multiport couplers, one at the input and one at the output, interconnected by an array of waveguides. An AWG has several functions. It can be an n × 1 wavelength multiplexer, in which n input signals at different wavelengths are combined onto a single output. It can be a 1 × n wavelength demultiplexer, which performs the inverse function. It can also be used as an add-drop multiplexer, in which some data is dropped and new data is added to replace it [3], [7].
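The multiplexer and demultiplexer roles can be viewed as special cases of wavelength routing through the device. The sketch below assumes one common cyclic-routing convention for an N × N AWG router, in which a signal entering input port i on wavelength index w leaves on output port (i + w) mod N. The convention and the function names are assumptions for illustration; actual devices may use a different port or wavelength numbering.

```python
def awgr_output_port(input_port: int, wavelength_index: int, n_ports: int) -> int:
    """Output port reached under the assumed cyclic routing rule."""
    return (input_port + wavelength_index) % n_ports


def wavelength_for(input_port: int, output_port: int, n_ports: int) -> int:
    """Wavelength index that connects a given input port to a given output port."""
    return (output_port - input_port) % n_ports


if __name__ == "__main__":
    n = 4
    # Multiplexing: every input reaches output 0, each on a distinct wavelength.
    for i in range(n):
        print(f"input {i} -> output 0 uses wavelength index {wavelength_for(i, 0, n)}")
```

Under this rule, n inputs on n distinct wavelengths converge on one output (multiplexing), and one input carrying n wavelengths fans out to n outputs (demultiplexing).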

Fiber Bragg Gratings

The term grating describes a process involving interference between optical signals that originate from the same source but have different phase shifts. In a WDM communication system, a grating can be used as a demultiplexer to separate wavelengths and as a multiplexer to combine them [3], [7].

Coupler/Splitters

Couplers are widely used in optical access networks. A coupler is a device with diverse applications, the simplest of which is combining or splitting wavelength signals. Most PON devices, couplers for instance, are reciprocal: when the input and output signals are reversed, the device works in exactly the same way. However, many systems need to prevent reflections travelling in one direction, which requires nonreciprocal devices such as isolators; these are mostly used at optical amplifiers and lasers [3], [7].
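A passive splitter divides optical power among its outputs, so each output receives only a fraction of the input. As a rough sketch, an ideal 1:N splitter that divides power equally has a splitting loss of 10·log10(N) dB. The `excess_loss_db` parameter and the example power level are hypothetical placeholders, not values from the cited sources.

```python
import math


def ideal_split_loss_db(n_outputs: int) -> float:
    """Splitting loss of an ideal, equal-ratio 1:N passive splitter."""
    if n_outputs < 1:
        raise ValueError("n_outputs must be at least 1")
    return 10 * math.log10(n_outputs)


def output_power_dbm(input_dbm: float, n_outputs: int, excess_loss_db: float = 0.0) -> float:
    """Power at each output; excess_loss_db is an assumed extra device loss."""
    return input_dbm - ideal_split_loss_db(n_outputs) - excess_loss_db


if __name__ == "__main__":
    for n in (2, 8, 32):
        print(f"1:{n} splitter, ideal loss {ideal_split_loss_db(n):.2f} dB")
```

Each doubling of the split ratio costs about 3 dB, which is one reason the number of ONUs per splitter is limited in practice.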


PON is classified into three multiplexing techniques:

The first is Time Division Multiplexing PON (TDM-PON). TDM-PON uses two wavelengths: one for upstream from the ONUs to the OLT and the other for downstream from the OLT to the ONUs [6], [8]. The second is Orthogonal Frequency Division Multiplexing PON (OFDM-PON). OFDM-PON also uses two wavelengths, one for upstream and the other for downstream, and the available bandwidth is shared by the ONUs at the end users [6], [9]. The last is Wavelength Division Multiplexing PON (WDM-PON), in which each ONU is assigned a dedicated pair of wavelengths for the uplink and downlink [8]. However, WDM-PON faces a few limitations, such as wavelength scalability, photodetector deployment, and bandwidth utilization. To overcome these limitations, hybrid WDM-TDM designs have been proposed, in which wavelengths are assigned to and shared by multiple ONUs placed at multiple PONs. The ability to tune to different wavelengths allows ONUs to join other TDM-PONs, which improves bandwidth utilization [3], [10]. Because the growth in telecommunication application services and the demand for high-speed data processing require low latency and energy saving, new research is investigating technologies to address these issues. Optical access networks provide an applicable solution to emerging issues in current data center networks: the exponential increase in data traffic has driven optical access networks to offer high throughput, low latency, and reduced energy consumption compared to current networks based on commodity switches [11].
Kachris and Tomas in [11] proposed a novel approach that uses an Ethernet top-of-rack (ToR) electronic switch for intra-rack communication (between servers located within the same rack) and a wavelength division multiplexing (WDM) PON with an AWGR for inter-rack communication (between servers located in different racks). The performance evaluation of this design shows a power reduction of 10% with a slight increase in packet delay. To facilitate inter-rack and intra-rack communication between servers, and to provide high speed, energy efficiency, and connectivity inside data centers, five novel designs were proposed in [3], using mostly passive optical devices with more than a single route between servers. One of the designs addresses the oversubscription issues facing inter-cell communication in the AWGR PON data center architecture. To overcome these issues, the design introduces two tiers of AWGRs to support multipath routing and energy-efficient inter-cell communication. The results show that, with the SDN architecture, the energy consumption can be reduced by up to 90% at typical average data rates [3]. Another design in [3] shows that PON data center networks can reduce power consumption by 85% in comparison to the Fat-Tree architecture and by 93% in comparison to the BCube architecture. In design option 3 in [3], the author optimized the wavelength assignment and routing of the PON architecture and observed a significant reduction in energy consumption and cost compared to previous systems. This was achieved by using intermediate AWGRs, which removes the need for additional optical backplanes, FBGs, or star reflectors in intra communication, since that communication can be provisioned by the same AWGRs.

THE METRO NETWORKS

As long as service providers are able to improve their metro networks, the rollout of 5G services holds an exciting future for them. Building a smart metro network that supports traffic from heterogeneous 5G access networks while meeting capacity, low-latency, and power consumption specifications will be vital (cttc,2017) [12]. The metro network is one of the network domains (access network, metro network, and core network) and typically spans a metropolitan area. It connects equipment that aggregates subscribers' data traffic by providing interfaces to different access networks, such as Digital Subscriber Line (xDSL) and Fiber-to-the-Home (FTTH), and it provides a connection to the internet through the core network [1]. Synchronous Optical Networking (SONET), optical Wavelength Division Multiplexing (WDM) rings, and Metro Ethernet are the three predominant networking technologies in metropolitan regions, as shown in Fig 1 [1].

Metro Ethernet Networks:

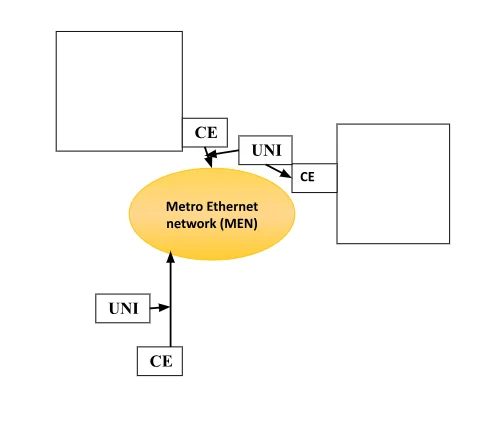

Many service providers now offer Metro Ethernet services, and some have expanded their services beyond metropolitan areas to cover wide areas. Ethernet services are provided by the Metro Ethernet Network (MEN) provider, and Customer Equipment (CE) connects to the network through a User-Network Interface (UNI) using a standard 10 Mbps, 100 Mbps, 1 Gbps, or 10 Gbps Ethernet interface, as shown in Fig 2, which is the basic model for Metro Ethernet [13][1].

Metro Ethernet can handle multiple subscribers (UNIs) attached to the MEN from the same building or a single location (site) [13]. Two types of Ethernet service are described in [13]: Ethernet Line (E-Line), which is a point-to-point service, and Ethernet LAN (E-LAN), which is a multipoint-to-multipoint service. Metro Ethernet relies on edge routers to connect to external networks, on broadband network gateways, which are the access point for subscribers [14], and on Ethernet switches, which are the basic components of the metro network infrastructure [1].

SONET/SDH Optical Metro Networks:

Synchronous Optical Networking (SONET) and Synchronous Digital Hierarchy (SDH) are standardized protocols used to carry multiple digital bit streams synchronously over optical fiber; SONET was proposed by Bellcore (Bell Communications Research) in 1985. The underlying reason for developing SONET was to disseminate standard optical transmission signal interfaces that provide high-speed transmission over a single-mode fiber interface, with direct optical interfaces on terminals and new network features [15]. Legacy SONET/SDH ring infrastructures still make up the majority of today's metro area network infrastructure. This is a fundamental obstacle to keeping pace with the increase in data traffic, because the infrastructure was developed for narrowband, circuit-switched voice traffic. The legacy SONET/SDH architecture has two basic parts, the metro core ring and the metro access ring, interconnected by SONET/SDH add/drop multiplexers (ADMs) and digital access cross-connect systems (DACS), as shown in Fig 3 [16]. This voice-based legacy architecture is not well suited to data traffic, so it is adapted by introducing additional switches or routers that map data into TDM channels before communication takes place over the legacy architecture. These additional components reduce the effectiveness of data-centric metro networks by increasing complexity and the number of network tiers [16].

METRO WDM RING NETWORKS

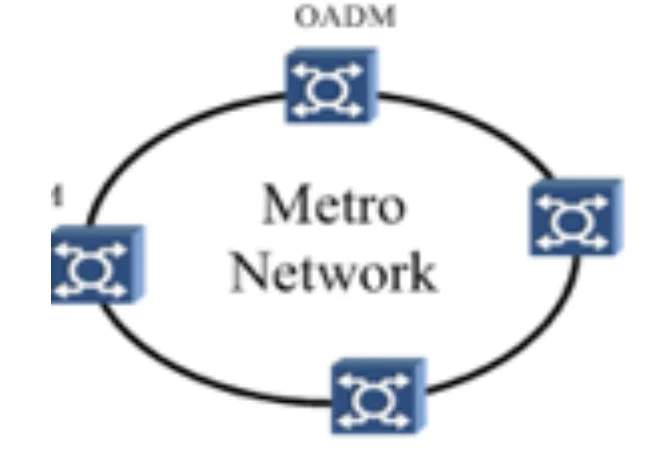

The intensive growth of applications and telecom services requires high-throughput metro networks with the capacity to support foreseeable bandwidth demands. Fiber-optic technology can be a suitable solution because of features such as high data rates, massive bandwidth, low signal attenuation, low power requirements, and low cost [17]. Metro WDM ring networks are designed to take advantage of fiber-optic technology, such as its higher speed and massive bandwidth [1]. The main reason for deploying WDM in metro networks is the ability to send various data types simultaneously as light over a single fiber, each on a unique wavelength, instead of using multiple fibers, as shown in Fig 4 [18].

Energy consumption in the metro network comes primarily from the Optical Add-Drop Multiplexers (OADMs), which add and drop optical signals [1]. For energy conservation in the metro network, the authors in [19] compare power-optimal designs of a unidirectional WDM ring for three network architectures: the First-Generation (FG) optical network, the Single-Hop (SH) network, and the Multi-Hop (MH) network. In the FG architecture, every node processes all incoming and outgoing traffic. In the SH architecture, each node processes only the traffic that enters or leaves the network at that node. The MH architecture lies between the two. For all three architectures, integer linear programming (ILP) formulations are used to minimize energy consumption. Achieving power savings in network design requires reducing the power drawn by both electronic and optical components. The results in [19] show that the SH network consumes less power than the FG network not only when the optical components have low power consumption, but also when the data rate is close to the wavelength capacity. The results in [19] also show that the MH network outperforms the SH and FG networks because it can exploit optical transparency and handle traffic multiplexing while saving energy.
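The difference between the FG and SH architectures can be illustrated by counting how much traffic is processed electronically on a unidirectional ring. The sketch below is only a counting exercise under simplifying assumptions, not the ILP formulation of [19]: traffic travels in one direction, FG processes a demand at every node on its path, SH processes it only at the source and destination, and the MH case is omitted. The demand values and function names are hypothetical.

```python
def ring_path(src: int, dst: int, n_nodes: int) -> list[int]:
    """Nodes visited travelling in the ring direction from src to dst, inclusive."""
    hops = (dst - src) % n_nodes
    return [(src + k) % n_nodes for k in range(hops + 1)]


def electronic_load(demands, n_nodes: int, architecture: str) -> float:
    """Total traffic volume processed electronically, summed over all nodes."""
    load = 0.0
    for src, dst, volume in demands:
        if src == dst:
            raise ValueError("source and destination must differ")
        if architecture == "FG":
            # Every node on the path terminates and re-sends the traffic.
            load += volume * len(ring_path(src, dst, n_nodes))
        elif architecture == "SH":
            # Only the source and destination touch the traffic electronically.
            load += volume * 2
        else:
            raise ValueError("architecture must be 'FG' or 'SH'")
    return load


if __name__ == "__main__":
    demands = [(0, 3, 1.0), (1, 2, 2.0)]  # (source, destination, volume), hypothetical
    for arch in ("FG", "SH"):
        print(arch, electronic_load(demands, 4, arch))
```

The gap between the two counts grows with path length, which gives an intuition for why bypassing intermediate nodes optically can reduce electronic processing power.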

CORE NETWORKS:

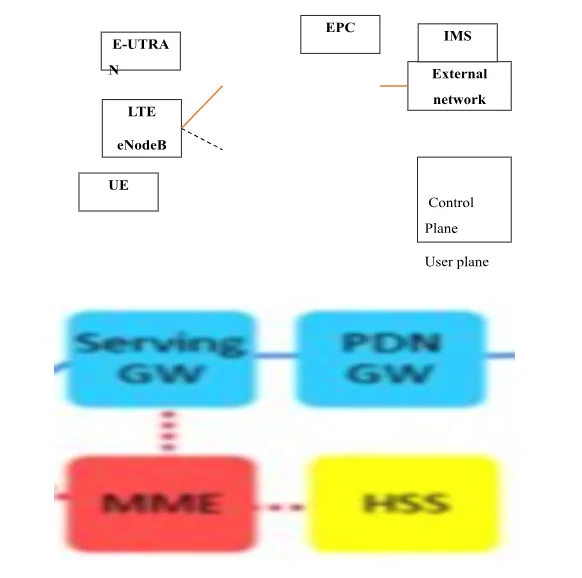

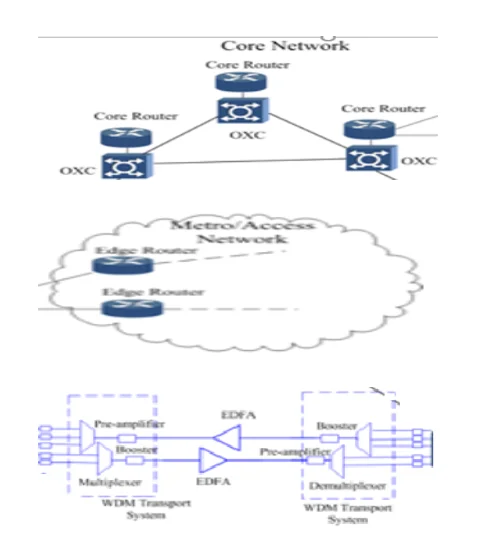

To meet the explosive growth in data traffic driven by the growth in application services, the demand for high-speed data processing, and the need to reduce power consumption, core networks must have an appropriate infrastructure. The core network, or backbone infrastructure, interconnects wide areas such as large cities (network nodes) and extends to wider regions such as countries and continents, and across intercontinental distances. Core networks collect massive data traffic from the other network domains (metro network, access network) and are based on a mesh interconnection pattern. It is therefore crucial to provide suitable interfaces capable of handling, collecting, and distributing huge amounts of data traffic through the access and metro networks, which collect and distribute traffic between end users who are separated by distance and communicate through core networks [1]. The Evolved Packet Core (EPC) is the core network architecture and the latest evolution from the 3rd Generation Partnership Project (3GPP); it is designed to use IP (Internet Protocol) as the key protocol for transporting data traffic. In previous generations of telecommunication, the core network was circuit switched in the Global System for Mobile communication (GSM) architecture, and packet switching was added alongside circuit switching in the General Packet Radio Service (GPRS) architecture. The 3GPP, however, decided to eliminate the circuit-switched domain and to make the EPC architecture an evolution of the packet-switched domain used in GPRS [20]. The EPC is designed to separate the user plane, which comprises the components that forward traffic from end users’ equipment to external networks, from the control plane, which is in charge of routing and carrying signaling.
This separation makes scaling independent, so service providers can dimension and adapt their networks smoothly [20]. The EPC architecture connects to the User Equipment (UE) through the Evolved Universal Terrestrial Radio Access Network (E-UTRAN), the LTE access network, in which the Evolved NodeB (eNodeB) is the base station. The EPC architecture consists of four elements, as shown in Fig 1 [20]: the Serving Gateway (S-GW), which handles the user plane and transports data traffic between user equipment and external networks, including the IP Multimedia Core Network Subsystem (IMS); the Packet Data Network Gateway (PDN GW or P-GW), which connects the EPC architecture to external IP networks; the Mobility Management Entity (MME), which handles the control plane and controls mobility signaling for E-UTRAN; and the Home Subscriber Server (HSS), which is in charge of user information and user security [20].

The core network architecture, or EPC in modern technologies, represents the central part of the telecommunication network hierarchy and provides global coverage, as shown in Fig 2 [1].

To support the basic physical infrastructure and achieve high-speed data processing, high capacity, and energy saving, deploying optical technologies such as IP (Internet Protocol) over SONET/SDH, IP over WDM, or IP over SONET/SDH over WDM is vital [1]. The intensive growth in data traffic in the 1990s motivated the transport of IP traffic over the synchronous optical network (SONET). IP over SONET provides data rates ranging from 51 Mb/s at Optical Carrier level 1 (OC-1) to 200 Gb/s (OC-3840). The need for still more network capacity then led network planners to a new technology capable of handling the increase in data traffic: transporting IP over wavelength-division multiplexing (WDM). IP over WDM is considered a long-term solution for IP backbone networks because it can absorb the growth in capacity [21]. Because core networks have a multi-layer architecture, with an optical layer and an electronic layer, energy saving is required in both layers. For instance, energy consumption in an IP-over-WDM network arises at the switching (routing) level and at the transmission level. At the routing level, energy is consumed by the Digital Cross-Connects (DXCs) and IP routers that switch signals in the electronic layer, and by the Optical Cross-Connects (OXCs) that switch optical signals in the optical layer. At the transmission level, energy is consumed by the multiplexers that combine signals and the demultiplexers that separate them [1].
Furthermore, energy consumption in a WDM system also comes from the components shown in Fig 3: the transponder, which transmits and receives signals; the booster, a power amplifier that compensates for power loss; the pre-amplifier, which amplifies the power of optical signals; and the Erbium-Doped Fiber Amplifiers (EDFAs), which amplify optical signals in the optical fiber [1].
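The SONET rates quoted above follow from the Optical Carrier hierarchy, in which OC-n runs at n times the OC-1 base rate of 51.84 Mb/s. The short sketch below reproduces the two endpoints mentioned in the text; the function name `oc_rate_mbps` is an illustrative choice.

```python
OC1_MBPS = 51.84  # base line rate of OC-1


def oc_rate_mbps(n: int) -> float:
    """Line rate of OC-n in Mb/s."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return n * OC1_MBPS


if __name__ == "__main__":
    for level in (1, 3, 48, 192, 3840):
        print(f"OC-{level}: {oc_rate_mbps(level):,.2f} Mb/s")
```

OC-3840 works out to roughly 199 Gb/s, which the text rounds to 200 Gb/s. These are line rates, so the payload available to IP traffic is somewhat lower.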

Because core networks consume a massive amount of energy, and because data traffic keeps increasing with the growth in telecom application services, much recent research has tackled this issue, seeking solutions that reduce power consumption while handling the increase in data traffic. Energy efficiency in core networks can be achieved by designing an IP-over-WDM network that minimizes the energy consumed by its transponders and IP routers. The authors in [22] investigated the energy efficiency of physical topologies for IP-over-WDM core networks and developed a mixed integer linear programming model that optimizes the physical topology to reduce power consumption. Under the non-bypass approach, the optimized full mesh topology eliminates intermediate nodes, which reduces the number of transponders and of IP routers, the largest consumers of energy, and therefore saves energy where consumption is highest. Another topology, the star topology, eliminates the need for IP routers. It has fewer links than the full mesh topology but needs more transponders, so it consumes more power than the full mesh topology. The results in [22] show that the optimized full mesh and star topologies achieve significant power savings of 95% and 92%, respectively. Energy efficiency in the core network can also be achieved through network function virtualization (NFV). The author in [23] proposed an architecture that virtualizes the main functions of the mobile core network, including the P-GW, S-GW, HSS, and MME, and provides them on demand; it also virtualizes the functions of the baseband unit (BBU) of the eNB. The proposed architecture also uses gigabit passive optical networks (GPON) in the optical fronthaul.
The results in [23] show that virtualization in both the IP/WDM and GPON access networks can achieve an energy saving of 22% compared to virtualization in the backbone network only.

Cloud computing:

To make 5G substantially different from previous generations, minimizing latency will be vital [24]. This means optimizing cloud computing so that mobile devices and other 5G equipment can make quick decisions; an autonomous car, for example, cannot wait a long time to receive data while moving. The US National Institute of Standards and Technology (NIST) has defined cloud computing as a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction [25][26]. Cloud computing is thus a pool of resources capable of provisioning services to multiple users at the same time, which has had a great influence on the IT field [27]. Moving data and computing from users’ devices, such as PCs or laptops, into data centers has become a substantial trend in IT [28]. Cloud computing aims to pool distributed resources to reach higher throughput and provide large-scale computing that meets users’ needs, without users needing to know the physical location of the resources or needing special skills to reach them [28]. The cloud computing model consists of five essential characteristics, three service models, and four deployment models [25].

The characteristics for Cloud Computing [25][26]

On-demand self-service: Resources such as storage and servers are provided whenever users need them, without requiring human interaction with the service provider.

Broad network access: Cloud computing resources are accessible from heterogeneous platforms such as smartphones, tablets, and laptops.

Resource pooling: Cloud computing resources are pooled to serve clients using a multi-tenancy model, with virtual machines dynamically assigned and reassigned according to client demand, so resource pooling is frequently based on virtualization technologies.

Rapid elasticity: Clients can obtain resources by rapidly scaling out and release them by scaling back in, according to their demand.

Measured service: Cloud computing resources are controlled and monitored by the cloud provider. Controlling and monitoring resources is useful for capacity planning, access control, and resource optimization.

Service Models

Software as a Service (SaaS): Clients are given the capability to use the provider’s applications running on remote servers. These applications can be accessed from various client devices through a client interface such as a web browser or a program interface. Clients cannot control or manage the underlying cloud infrastructure, such as servers, storage, and operating systems [25], [26]. There is no need to install the applications or programs on users’ devices [29].

Platform as a Service (PaaS): PaaS provides the capability to deploy applications onto the cloud infrastructure. Clients can create applications using the programming languages supported by the PaaS, and they can control these applications. However, clients cannot control or manage the underlying cloud infrastructure, such as servers, storage, and operating systems; they control only the deployed applications [25], [26].

Infrastructure as a Service (IaaS): IaaS gives clients the capability to provision processing, networks, storage, and operating systems, on which they can deploy, manage, and run arbitrary software, including applications and operating systems. IaaS is sometimes called Hardware-as-a-Service (HaaS) [29]. Clients cannot control or manage the underlying cloud infrastructure, but they have control over servers, storage, operating systems, and selected components (e.g., host firewalls) [25], [26].

Deployment Models for Cloud Computing [25]

- Private cloud. Private cloud infrastructure is designed for the exclusive use of a single organization, such as a business or company, comprising multiple users. It can be controlled or managed by the organization itself or by a third party, and it may be located on or off premises [25].

- Public cloud. Public cloud infrastructure is designed for open use by the general public. It can be managed and controlled by a business organization (e.g., Microsoft, Amazon, and Google) [26], an academic organization, or a government organization [25].

- Community cloud. Community cloud infrastructure is designed for a specific community of clients from several organizations that have shared concerns, such as the same mission or policies [26]. It can be managed and controlled by a third party or by one or more of the organizations, and it may be located on or off premises [25].

- Hybrid cloud. Hybrid cloud infrastructure is a mixture of two or more deployment models (private, public, and community cloud) [25].

To keep latency low and reduce power consumption, it will be vital in fifth generation (5G) networks to use mobile edge computing (MEC) to provision cloud computing close to user devices, instead of sending requests to data centers located miles away from the users [30]. Advanced technologies and designs in current mobile devices have led to an evolution in applications, and these applications demand intensive computation, which leads to high energy consumption. Mobile Cloud Computing (MCC) can address the energy consumption and latency issues through efficient offloading, since computing close to user devices, rather than in the core network, reduces latency and power consumption. Processing speed improves significantly if smaller, decentralized servers provision cloud computing close to the users, primarily because congestion and strain on the network are reduced [30]. To minimize energy consumption, the authors in [30] propose an energy-efficient computation offloading (EECO) scheme that takes into account the energy consumed by both file transmission and task computing. The results in [30] show that the proposed design achieves a significant power saving of 18% compared to the scheme without offloading.
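The trade-off between transmission energy and computing energy can be sketched as a simple decision rule. This is not the EECO scheme of [30], only a minimal illustration under assumed models: local energy is proportional to CPU cycles, offloading energy is transmit power multiplied by upload time, result download and server-side energy are ignored, and all parameter values and names (`Task`, `local_cost`, `offload_cost`, `choose`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    data_bits: float   # input data to upload if the task is offloaded
    cpu_cycles: float  # computation the task requires
    deadline_s: float  # latest acceptable completion time


def local_cost(task: Task, cpu_hz: float, joules_per_cycle: float):
    """Return (latency in s, device energy in J) for running on the device."""
    return task.cpu_cycles / cpu_hz, task.cpu_cycles * joules_per_cycle


def offload_cost(task: Task, uplink_bps: float, tx_power_w: float, server_hz: float):
    """Return (latency in s, device energy in J) for uploading and running remotely."""
    tx_time = task.data_bits / uplink_bps
    return tx_time + task.cpu_cycles / server_hz, tx_power_w * tx_time


def choose(task: Task, local, offload) -> str:
    """Pick the lower-energy option among those that meet the deadline."""
    options = {"local": local, "offload": offload}
    feasible = {k: v for k, v in options.items() if v[0] <= task.deadline_s}
    if not feasible:
        return "infeasible"
    return min(feasible, key=lambda k: feasible[k][1])


if __name__ == "__main__":
    task = Task(data_bits=8e6, cpu_cycles=2e9, deadline_s=2.0)
    local = local_cost(task, cpu_hz=1e9, joules_per_cycle=1e-9)
    offload = offload_cost(task, uplink_bps=20e6, tx_power_w=0.5, server_hz=10e9)
    print(choose(task, local, offload))
```

With a fast uplink the upload costs little energy and offloading is chosen; with a slow uplink the upload time can exceed the deadline and the task stays on the device.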

FOG COMPUTING

In recent years, telecommunication service providers have witnessed tremendous growth in data traffic, caused by an explosive increase in telecommunication applications and in the number of connected devices. Data traffic is expected to keep growing in the coming years, and the number of devices connected to the internet was estimated to reach 50 billion by 2020 [31]. Because of the increasing number of connected devices that rely on cloud computing, and the physical distance between users and the cloud, several challenges have been associated with the cloud, including latency, traffic congestion, and power consumption [32]. To support this growth in data traffic and to overcome the challenges facing cloud computing, the telecommunication industry has introduced a new evolution of data computing, known as fog computing, which copes with the growth in data traffic, provides low-latency data processing, and reduces power consumption [33]. Conventional cloud computing relies at its core on data centers that can process and store massive amounts of data and that are connected to each other through optical networks to form the data center networks (DCNs) linked to users [33]. Conventional cloud computing has been the preferred solution in past years, especially for dealing with very large volumes of data. On the other hand, some new generations of applications, such as Internet of Things and augmented reality applications, need real-time responses (low latency), location awareness based on the best place for data processing, and mobility support. For these applications, the long distance that sometimes separates user devices from the conventional cloud can be a critical issue [31], [33], [34].
Thus, data computing should be deployed close to user devices, for example on an industrial facility floor, on a power pole, beside a railroad track, or in a vehicle, to serve applications that require real-time interaction. This is one of the underlying reasons for the emergence of fog computing [31], [33].

EDGE COMPUTING [fog3]

Edge computing represents the intermediate layer between conventional cloud computing and the user device, and it provides the real-time responses required by the new generation of applications [33]. Edge computing comprises three implementations: Mobile Edge Computing (MEC), Fog Computing (FC), and Cloudlet Computing (CC), as shown in Fig 1 [33]. MEC stores and processes data within the Radio Access Network (RAN), at the base stations, by deploying intermediate nodes. MEC servers provide real-time information about their networks, including capacity and load, and provide information to users, including network information and the user's location [33]. CC relies on dedicated devices with capabilities similar to those of a data center, placed logically close to the users. A cloudlet can be considered a “data center in a box” or a “standalone cloud”, and it can provide resources to users over a Wireless Local Area Network (WLAN) [33][34][35]. Fog computing is designed as an intermediate virtualized layer, based on a decentralized architecture, between heterogeneous user devices and conventional cloud computing. It uses a large number of geographically distributed nodes to provide computing, storage, and data processing close to users, which delivers the real-time interaction required by applications such as augmented reality and also supports mobility [33][35][36].

The OpenFog Consortium defines fog computing in [37] as a horizontal, system-level architecture that distributes computing, storage, control, and networking functions closer to the users along a cloud-to-thing continuum. Fog computing is considered a complement to conventional cloud computing, not a replacement, because it can interact with the cloud and enables processing at the edge of the network [34].

Differences in Edge Computing Types

All three types of edge computing aim to bring computing closer to the network edge. However, some differences among them are discussed in [34][32]. The first relates to virtualization: cloudlets rely only on virtual machine (VM) technology, while the other two can also use other virtualization technologies. The second is the operating mode: MEC can function only in standalone mode, while a cloudlet can function in standalone mode or connect to the cloud; fog computing, by contrast, is considered an extension of cloud computing. The third concerns the types of applications each one targets. Cloudlets target mobile offloading applications; MEC focuses on applications that are better provisioned at the edge, whether mobile or non-mobile, including mobile offloading applications; and fog computing goes beyond the previous two by enabling applications that span the cloud and the edge, in order to satisfy applications that require real-time responses.

Fog Computing Architecture

Given the substantial role of fog computing in reducing latency and power consumption, designing an optimal architecture that serves these goals has become a significant research area. Most proposed architectures adopt an essential three-layer structure: an end-user layer consisting of user devices; a fog layer that includes fog nodes (routers, gateways, switches, access points, base stations, and dedicated fog servers); and a cloud layer that includes the conventional nodes of cloud computing, as shown in fig 2 [38]. In [37], the OpenFog Consortium describes a fog computing infrastructure that enables building Fog as a Service (FaaS) to address certain business issues; FaaS includes Infrastructure as a Service (IaaS), Platform as a Service (PaaS), Software as a Service (SaaS), and many service constructs specific to fog.

Goals and Challenges of Fog Computing

The design goals of fog computing are: latency, the essential goal, to serve user applications that require low latency; efficiency, meaning efficient use of resources and energy, since some nodes have limited computing power and memory; and generality, meaning a common abstraction that covers the application layer and services, since fog nodes and user devices are heterogeneous [35]. With fog computing, the new generation of applications that require real-time responses will be processed and stored at the edge of the network, while traditional applications that are not latency-sensitive can be processed and stored in the cloud [34]. However, fog computing faces several challenges. These include the choice of virtualization technique, since node performance depends on it; data aggregation, which causes delay if aggregation is not complete before data processing begins; and resource provisioning, which can be a major challenge because some nodes have limited resources. Fog computing therefore needs optimal ways to overcome these challenges [35].
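The split between latency-sensitive and latency-tolerant applications described above can be illustrated with a minimal sketch. The round-trip figures, the `Application` type, and the `place` function are hypothetical and chosen only for illustration; they are not taken from [34] or [35].

```python
from dataclasses import dataclass

# Assumed round-trip latencies in milliseconds (illustrative values only).
FOG_RTT_MS = 10.0
CLOUD_RTT_MS = 100.0


@dataclass
class Application:
    name: str
    max_latency_ms: float  # largest response delay the application tolerates


def place(app: Application) -> str:
    """Choose where to run an application based on its latency tolerance.

    Applications that tolerate the cloud round trip stay in the cloud;
    those that only the fog layer can satisfy go to the fog; anything
    stricter than the fog round trip cannot be served in this toy model.
    """
    if app.max_latency_ms >= CLOUD_RTT_MS:
        return "cloud"
    if app.max_latency_ms >= FOG_RTT_MS:
        return "fog"
    return "unserved"


if __name__ == "__main__":
    for app in (Application("augmented reality", 20.0),
                Application("nightly backup", 5000.0)):
        print(app.name, "->", place(app))
```

A real placement policy would also account for the limited computing power and memory of fog nodes noted above; this sketch considers latency alone.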

Fog Computing Benefits

Fog computing offers solutions to the challenges that cloud computing experiences with the massive growth in data traffic. Compared to cloud computing, fog stands out along three major dimensions: carrying out data storage at or near the end user instead of only in remote data centers; carrying out control and computing functions at or near the end user instead of at remote data centers; and carrying out networking and communication at or near the end user instead of routing all network traffic through the backbone networks [39]. These three dimensions enable low latency and the real-time interaction required by time-sensitive applications. Moreover, power saving is one of the substantial characteristics fog computing aims to provide, owing to its proximity to customers. The authors in [40] proposed a fog computing architecture and tested its performance for time-sensitive applications such as IoT applications. The results indicated that both the latency and the power consumption of fog computing running these applications are low compared with cloud computing. Furthermore, the authors in [41] investigated power consumption in fog computing using distributed servers, namely Nano Data Centers, capable of hosting and distributing content to users in peer-to-peer mode. To study power consumption in Nano Data Centers, they proposed and used two power consumption models: a time-based model for unshared network equipment and a flow-based model for shared network equipment such as routers and switches.
Several factors identified in [41] allow these decentralized servers to consume less power than centralized servers: the type of access network attached to the fog, the fog servers' time utilization (the proportion of idle time to active time), and the type of application running on the fog, including its number of downloads and updates. The authors' results indicate that Nano Data Centers, as a form of fog computing, can complement centralized servers for certain applications, most of which need real-time responses, such as IoT applications, and that offloading these applications from centralized to decentralized servers leads to energy savings.
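The distinction between a time-based and a flow-based model can be made concrete with a rough sketch. The formulas below are simplified assumptions (power multiplied by time for dedicated equipment, energy per bit multiplied by traffic volume for shared equipment) and do not reproduce the exact formulations in [41]; all function names, parameter names, and numbers are hypothetical.

```python
def time_based_energy_j(idle_power_w: float, active_power_w: float,
                        idle_s: float, active_s: float) -> float:
    """Energy of unshared equipment, charged for all the time it is powered.

    Assumed form: idle power times idle time plus active power times
    active time. The idle share matters because a dedicated device draws
    power even when it serves no traffic.
    """
    return idle_power_w * idle_s + active_power_w * active_s


def flow_based_energy_j(energy_per_bit_j: float, bits: float) -> float:
    """Energy attributed to one flow crossing shared equipment.

    Assumed form: a fixed energy-per-bit figure times the number of bits
    in the flow, so each flow is charged only for its own traffic.
    """
    return energy_per_bit_j * bits


if __name__ == "__main__":
    # Hypothetical home server: idle for 3000 s at 2 W, active for 600 s at 5 W.
    print(time_based_energy_j(2.0, 5.0, 3000.0, 600.0))
    # Hypothetical shared router: 10 nJ per bit, 1 GB (8e9 bits) transferred.
    print(flow_based_energy_j(1e-8, 8e9))
```

Under these assumptions, a dedicated server that is idle most of the time is dominated by the idle term, which is consistent with time utilization being listed above as one of the factors that decide whether the decentralized option saves energy.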

DEMONSTRATIVE USE CASE

To demonstrate the benefit of fog computing, this review discusses one of the illustrative use cases in [34]: latency in content delivery networks (CDNs). A CDN is designed to deliver requested content (e.g. streaming video) to customers efficiently and with low latency. A CDN consists of two kinds of servers, an origin server that stores the original video and a surrogate server that replicates it, together with controllers that are in charge of selecting suitable surrogate servers based on distance, network conditions, and content availability. Additionally, a CDN uses access points such as base stations to deliver content to customers. The surrogate servers used in conventional cloud computing might be placed too far from the customers, delaying the requested content. A proposed solution is to implement fog computing between the cloud stratum and the customers, as shown in fig 2, so that popular content can be stored at the fog stratum in proactive or reactive mode. For instance, the content that Mike requested is stored in proactive mode at the fog stratum: it is pushed by the surrogate servers to the access point that Mike is connected to, as shown in (fig 2, action 2). The resulting delay is short compared with conventional cloud computing. Now assume that Mike moves to a different access point, such as base station B, as shown in (fig 2, action 4). Base station A will replicate the content on base station B in reactive mode if the surrogate servers have not already stored it there in proactive mode. The resulting delay is lower than in the case where the surrogate servers, rather than base station A, replicate the content on base station B in reactive mode [fog 8].
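The proactive and reactive modes in this use case can be sketched in a few lines. This is a toy model under stated assumptions: the `AccessPoint` class and the `push_proactive` and `handover` functions are hypothetical names introduced here, and the sketch tracks only where content is cached, not the actual delays.

```python
class AccessPoint:
    """A fog-stratum access point (e.g. a base station) with a content cache."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.cache: set[str] = set()

    def has(self, content_id: str) -> bool:
        return content_id in self.cache


def push_proactive(access_point: AccessPoint, content_id: str) -> None:
    """Proactive mode: a surrogate server pushes popular content in advance."""
    access_point.cache.add(content_id)


def handover(old_ap: AccessPoint, new_ap: AccessPoint, content_id: str) -> str:
    """Reactive mode: make content available when a user changes access point.

    Returns a label naming where the content came from, preferring the
    nearest source: the new access point itself, then the previous
    access point, then the more distant surrogate server.
    """
    if new_ap.has(content_id):
        return "already cached"
    new_ap.cache.add(content_id)
    if old_ap.has(content_id):
        return "copied from " + old_ap.name
    return "fetched from surrogate server"


if __name__ == "__main__":
    station_a, station_b = AccessPoint("A"), AccessPoint("B")
    push_proactive(station_a, "video")              # fig 2, action 2
    print(handover(station_a, station_b, "video"))  # fig 2, action 4
```

Preferring the previous access point over the surrogate server mirrors the argument in the text: the neighbouring base station is closer than the surrogate server, so replicating from it should shorten the delay.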

References

Y. Zhang, P. Chowdhury, M. Tornatore, and B. Mukherjee, “Energy Efficiency in Telecom Optical Networks,” IEEE Commun. Surv. Tutorials, vol. 12, no. 4, pp. 441–458, 2010.

“Vision and Roadmap: Routing Telecom and Data Centers Toward Efficient Energy Use,” 2009.

A. Hammadi, “Future PON Data Center Networks,” University of Leeds, 2016.

H. S. Abbas and M. Gregory, “The next generation of passive optical networks: A review,” J. Netw. Comput. Appl., 2016.

D. Hood and L. Lu, “Current and future ITU-T PON systems and standards,” in 2012 17th Opto-Electronics and Communications Conference, 2012, p. 125.

Z. T. Al-azez, “Optimised Green IoT Network Architectures,” University of Leeds, 2018.

R. Ramaswami, K. N. Sivarajan, and G. H. Sasaki, Optical Networks: A Practical Perspective, Third Edition.

F. Effenberger et al., “An introduction to PON technologies [Topics in Optical Communications],” IEEE Commun. Mag., vol. 45, no. 3, pp. S17–S25, 2007.

R. van der Linden, “Adaptive modulation techniques for passive optical networks,” Technische Universiteit Eindhoven, 2018.

D. J. Shin et al., “Hybrid WDM/TDM-PON with wavelength-selection-free transmitters,” J. Light. Technol., vol. 23, no. 1, pp. 187–195, 2005.

C. Kachris and I. Tomkos, “Power consumption evaluation of hybrid WDM PON networks for data centers,” in 2011 16th European Conference on Networks and Optical Communications, 2011, pp. 118–121.

R. Santitoro, “Metro Ethernet Services – A Technical Overview: What is an Ethernet Service?,” Metro, pp. 1–19, 2003.

Cisco, “Broadband Network Gateway Overview,” pp. 1–10.

Metro Ethernet Forum, “The Metro Ethernet Network: Comparison to Legacy SONET/SDH MANs for Metro Data Service Providers,” 2003.

I. Cerutti, L. Valcarenghi, and P. Castoldi, “Power Saving Architectures for Unidirectional WDM Rings,” Opt. Fiber Commun. Conf. Natl. Fiber Opt. Eng. Conf., p. OThQ7, 2009.

X. Dong, T. E. H. El-Gorashi, and J. M. H. Elmirghani, “On the Energy Efficiency of Physical Topology Design for IP Over WDM Networks,” J. Light. Technol., vol. 30, no. 12, pp. 1931–1942, 2012.

D. Puthal, B. P. S. Sahoo, S. Mishra, and S. Swain, “Cloud Computing Features, Issues, and Challenges: A Big Picture,” in 2015 International Conference on Computational Intelligence and Networks, 2015, pp. 116–123.

C. Gong, J. Liu, Q. Zhang, H. Chen, and Z. Gong, “The characteristics of cloud computing,” Proc. Int. Conf. Parallel Process. Work., pp. 275–279, 2010.

M. Mukherjee, L. Shu, and D. Wang, “Survey of fog computing: Fundamental, network applications, and research challenges,” IEEE Commun. Surv. Tutorials, vol. 20, no. 3, pp. 1826–1857, 2018.

K. Dolui and S. K. Datta, “Comparison of edge computing implementations: Fog computing, cloudlet and mobile edge computing,” GIoTS 2017 - Glob. Internet Things Summit, Proc., 2017.

C. Mouradian, D. Naboulsi, S. Yangui, R. H. Glitho, M. J. Morrow, and P. A. Polakos, “A Comprehensive Survey on Fog Computing: State-of-the-Art and Research Challenges,” IEEE Commun. Surv. Tutorials, vol. 20, no. 1, pp. 416–464, 2018.

S. Yi, Z. Hao, Z. Qin, and Q. Li, “Fog Computing: Platform and Applications,” in 2015 Third IEEE Workshop on Hot Topics in Web Systems and Technologies (HotWeb), 2015, pp. 73–78.

P. Hu, S. Dhelim, H. Ning, and T. Qiu, “Survey on fog computing: architecture, key technologies, applications and open issues,” J. Netw. Comput. Appl., vol. 98, no. April, pp. 27–42, 2017.

F. Jalali, K. Hinton, R. Ayre, T. Alpcan, and R. S. Tucker, “Fog Computing May Help to Save Energy in Cloud Computing,” IEEE J. Sel. Areas Commun., vol. 34, no. 5, pp. 1728–1739, 2016.
