Travelling Sales Person With Profits

Introduction

Traveling Salesman Problems with Profits (TSPs with Profits) are a generalization of the Traveling Salesman Problem (TSP) where it is not necessary to visit all vertices. With each vertex is associated a profit. The objective is to find a route with a profit (maximized) and the travel cost (minimized). Applications of these problems arise in contexts such as traveling salesman problems, job scheduling or carrier transportation. TSPs are the most widely studied combinatorial optimization problems.

In the Traveling Salesman Problem with Profits (TSPP) and time limit, we are given an undirected complete graph g with node set and edge set . We assume that node 1 ∈𝑉 represents the depot and nodes {2, … ,n} ∈ vare the customers. For each customer 𝑖∈𝑉\{1} there is a profit pi associated. The aim is to find a route starting and finishing at node 1 ∈𝑉 in such a way that the total distance travelled is less than t and the total profit is maximized. A feasible solution to the problem is one single route starting and finishing at the depot, which visits a subset of customers and the total length of the route is less than t. This problem arises in last mile logistics, where the porter needs to decide which deliveries he/she is willing to do.

HISTORY:

The origins of TSP are unclear. TSP problem was mathematically formulated in the 1800s (W.R. Hamilton & Thomas Kirkman). Hamilton’s Icosian Game was a recreational puzzle based on finding a Hamiltonian cycle. The general form of the TSP appears to have been first studied by mathematicians during the 1930s in Vienna and at Harvard, notably by Karl Menger.

It was first considered mathematically in the 1930s by Merrill M. Flood who was looking to solve a school bus routing problem. Hassler Whitney at Princeton University introduced the name, travelling salesman problem soon afterward. In the following decades, the problem was studied by many researchers from mathematics, computer science, chemistry, physics, and other sciences.

In the 1960s, however, a new approach was created, that instead of seeking optimal solutions, one would produce a solution whose length is provably bounded by a multiple of the optimal length, and in doing so create lower bounds for the problem; these may then be used with branch and bound approaches.

COMPLEXITY: NP-Hard

TSPP is typical of a large class of hard optimization problems. If there is a way to break this problem into smaller components, the components will be at least as complex as the original one. Such problems are defined as NP-Hard (Non-deterministic Polynomial time) problems.

As stated above there are two ways to solve the problem:

· Optimal Solutions: Optimal solutions or Exact solutions can be found using the branch and bound algorithms. But there is a limitation to the number of cities they can optimally solve.

· Heuristics: These approaches use approximations to help in the selection of the next node and will not always result in an optimal route.

OPTIMAL SOLUTIONS:

1.1 A Dynamic Programming Algorithm

Dynamic programming is a technique used on problems involving a sequence of interrelated decisions, where the goal is to determine the combination of decisions that maximizes overall effectiveness. The key characteristics of dynamic programming problems are: (i) The problem can be divided into stages with a policy decision required at each stage. (ii) Each stage has a state associated with it. (iii) The policy decision at each stage determines the state associated with the next stage. (iv) Given the current state, an optimal policy for the remaining stages is independent of the policy decisions of the previous stages. This is referred to as "the principle of optimality. The Traveling Salesman Problem with Profits can be viewed as a sequential decision problem. Each stage consists of visiting an additional node, and the policy decision to be made is which node, if any, to visit next. The state at each stage is specified by the set of nodes which have already been visited (S) and the node which was visited last (l). Thus, if the current state is (S,/) and the policy decision is to visit node k e_S next, then the state at the next stage is (S + k, k). Given the current state, the optimal sequence of remaining nodes is independent of the sequence used to get to that state.

1.2 A Branch and-Bound Algorithm

Branch and-bound algorithms are implicit enumeration techniques which iteratively reduce the number of feasible solutions that must be examined. They rely on the press of repeatedly breaking the set of feasible solutions into subsets (branching), and calculating bounds on the value of all feasible solutions contained within them (bounding). During the branch-and-bound process, the best feasible solution found thus far is called the incumbent. A heuristic algorithm is often used to generate an initial incumbent. For a 60 maximization problem, the set of all feasible solutions is partitioned into two or more subsets and, for each subset; an upper bound on the objective function is obtained for the solutions within that subset. If the upper bound for a subset is slower than the value of the incumbent, the n that subset cannot contain an optimal solution is excluded from further consideration. In the latter case, if the value of the solution exceeds that of the incumbent, the solution replaces the incumbent. A branching rule is not used to select the remaining subsets and partition it further into two or more new subsets. The process is repeated until there are no remaining subsets, i.e. all subsets have been fathomed. In principle, this can be applied to virtually all linear integer programming problems, including TSPP. Presented branch-and-bound algorithm, based on the method of Laporte and Martello [LM], that does exploit the structure of the problem. In their algorithm, Laporte and Martel, partition the set of feasible solutions by specifying the initial sequence of nodes in a sub-tour. Initially, the specified sequence consists of node 1 only. A subset of feasible solutions is partitioned by adding a node to the end of the specified initial sequence. One partition is formed for each node not in the specified sequence has a value greater than that of the incumbent and can be turned into a sub tour without violating the cost constraint; the incumbent is replaced with the sub tour corresponding to the specified sequence. A subset of feasible solutions is fathomed if the cost of the rectified node sequence exceeds the budget, or if an upper bound on the value of a solution containing the specified sequence is less than the value of the incumbent. A subset is also fathomed if it is shown that no additional nodes can be added to the sequence without violating the cost constraint, or if the specified sequence contains all n nodes.

1.3 An Alternate Branch-and-Bound Approach

An alternate branch-and-bound approach is uses the cost-constrained assignment problem for computing upper bounds and uses sub tour elimination for branching. Gensch proposed a branch-and-bound algorithm where upper bounds are computed by solving the cost-constrained assignment problem (CAP) using Lagrangian relaxation, and, when the resulting assignment consists of more than sub tour, partitioning is done using Garfinkel's procedure for sub tour elimination. Using the same ideas, Kataoka and Morito [KM] developed a branch-and-bound algorithm that does guarantee an optimal solution. They use Lagrangean relaxation to find an optimal solution to the linear programming relaxation of CAP. This is then used as the bounding method in a branch-and-bound algorithm for CAP, where partitioning is done by setting a fractional variable to 0 and 1, respectively. If the solution to CAP has a value greater than the incumbent but is not feasible for TSPP, that is, if it contains a sub tour that includes more single node and does not include node 1, partitioning is done by selecting an arc in this sub tour and creating two sub problems, one where the arc is always used and one where the arc is excluded. Otherwise, the sub problem is fathomed. This algorithm has two undesirable features: the embedding of a branch-and-bound algorithm within a branch-and-bound algorithm, and the use of a partitioning scheme that is less efficient than Garfinkel's sub tour elimination procedure. This branch-and-bound procedure can be improved in two ways. First, rather than solving CAP exactly, an upper bound can be computed. Second, using the two assignments, x; ( 2*) and r; ( 2*), produced as byproducts of this upper bounding method.

HEURISTIC SOLUTION:

1.4 Tabu Search Approach

Tabu search, (Fred W Glover, 1986)] and formalized in 1989, is a meta heuristic search[1] method employing local search methods used for mathematical optimization.

Local searches take a potential solution to a problem and check its immediate neighbors in the hope of finding an improved solution. Tabu search uses a local or neighborhood search procedure to iteratively move from one potential solution x to an improved solution x' in the neighborhood of x, until some stopping criterion has been satisfied (generally, an attempt limit or a score threshold). Local search procedures often become stuck in poor-scoring areas or areas where scores plateau. In order to avoid these pitfalls and explore regions of the search space that would be left unexplored by other local search procedures, Tabu search carefully explores the neighborhood of each solution as the search progresses. The solutions admitted to the new neighborhood, N^*(x), are determined through the use of memory structures. Using these memory structures, the search progresses by iteratively moving from the current solution x to an improved solution x' in N^*(x). Tabu search enhances the performance of local search by relaxing its basic rules. First, at each step worsening moves can be accepted if no improving move is available (like when the search is stuck at a strict local minimum). In addition, prohibitions (henceforth the term Tabu) are introduced to discourage the search from coming back to previously-visited solutions.

The travelling salesman with Profits (TSPP) is sometimes used to show the functionality of Tabu search. Since finding an optimal solution is NP-hard, heuristic-based approximation methods (such as local searches) are useful for devising close-to-optimal solutions. A class of strategies associated with Tabu search called ejection chain methods has made it possible to obtain high-quality TSP solutions efficiently

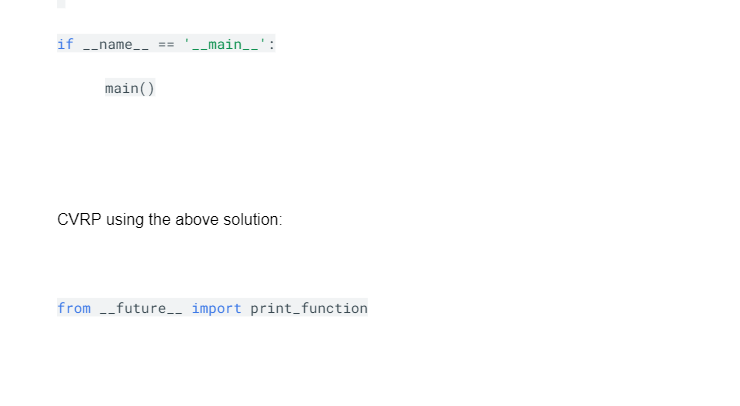

Instances of CVRP

2.1 Vehicle Routing with Pickups and Deliveries

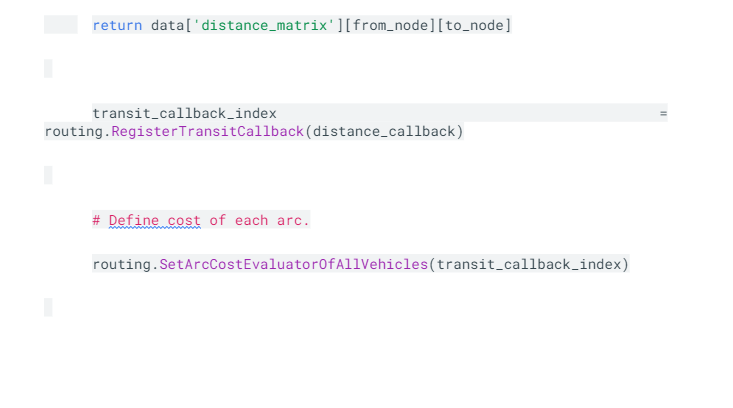

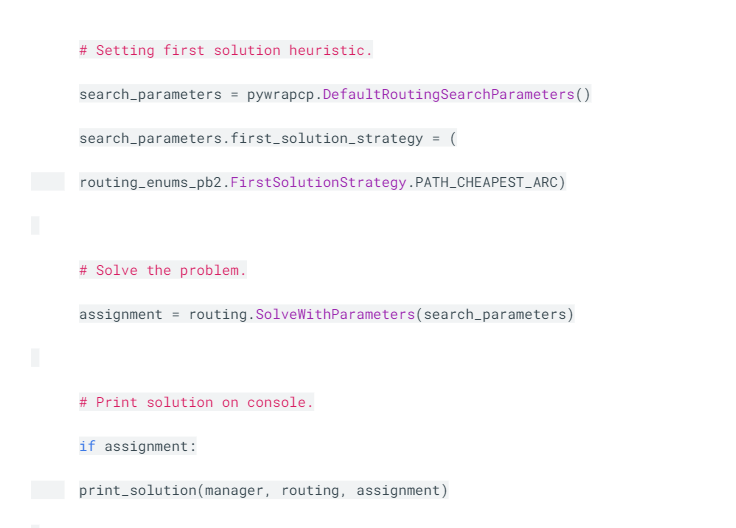

In the Vehicle Routing Problem (VRP), we are given a set of customers with known demands, a set of vehicles (typically homogeneous) and a depot. The problem is to design least-cost vehicle routes originating and terminating at the depot to service customers, subject to vehicle capacity constraints and in some cases there may be route length constraints.

2.2 Multi-Depot Vehicle Routing Problem (MVDRP)

In this problem vehicle starts and ends at the depot by serving a number of customers (in a defined time windows for each one). In the MDVRP, the customers are served by several vehicles; each one is located in one of the several depots established in different places. Each customer i should be served in his time windows that is defined as in which is the earliest arrival time and is the latest arrival time to serve customers. If the customer does not receive service in time windows, the vehicle will be fined.

2.3 Periodic Vehicle Routing problem

The Periodic Vehicle Routing Problem is a generalization of the classic vehicle routing problem in which vehicle routes must be constructed over multiple days. During each day within the planning period, a fleet of capacitated vehicles travels along routes that begin and end at a single depot. The objective of the PVRP is to find a set of tours for each vehicle that minimizes total travel cost while satisfying operational constraints (vehicle capacity and visit requirements).

Construction Heuristic for TSPP

In this section a meta-heuristic for the TSPP is presented using the solution procedure, Tabu search meta-heuristic.

Construction phase

Consider a partial path P, and define the set V¯ as the set of all non-visited nodes, V¯ ⊆ V0. In order to improve the partial path P, a node from V¯ should be added. This process requires two decisions: which node to insert and where to place it in the path. Two factors influence these choices: the profit of the nodes and the extra latency incurred by inserting that node. The place of insertion is then determined based on the improvement in score by adding this node. For a more detailed description and a pseudo-code, we refer to the code in appendix. In preliminary tests, the insertion based method is compared with other construction methods such as a greedy method.

Tabu Search has several similarities with Simulated Annealing, as both involve possible down hills moves. In fact, Simulated Annealing could be viewed as a special form of TS, whereby we use "graduated tenure", that is, a move becomes tabu with a specified probability.

Since the TSPP in the Euclidian plane is NP-hard, the mathematical model can only be solved for small instances. However, to assess the quality of the solutions found by Tabu search, an upper bound is required. We informally sketch here a simple bound. Assume that the number of visited nodes, k, is known. In order to get a lower bound for the latency in case k nodes are visited, we need the k-minimal spanning tree (k-MST). Since solving a k-MST is again an NP-hard problem, we use the minimal k-forest to approximate this. The minimal k-forest of a graph is the sub-graph containing the k −1 shortest edges that do not form a circuit. Each edge of the k-forest is assigned a multiplicity. The longest edge gets 1, the second longest 2, . . . , until the shortest edge gets k. The sum of the distances weighted with the corresponding multiplicities is then a lower bound for an optimal solution to the k-MST. Next, by summing the k largest profits, we get an upper bound for the collected profits. The difference of this upper bound and the lower bound for the k-MST gives an upper bound for the TSPP, under the assumption that k is known. Taking the maximum over k = 1, . . . , n, leads us to an upper bound for the TSPP.

Non-Construction Heuristic for TSPP

The basic idea of Tabu search is to avoid repetition of solutions and to use steepest ascend combined with mildest descend to escape from local optima. For example, the use of multiple neighborhoods requires several Tabu lists, and restricted candidate lists are used for the largest neighborhoods. For a more detailed description, we refer to [8]. First of all, as explained at the end of the previous section, our Tabu search uses the principles of variable neighborhood descent (VND). The neighborhoods are ordered as in Figure 3. In order to speed up the algorithm some restrictions are used to limit the size of the neighborhoods. For move-up (down), swap, 2-opt, and or-opt the maximum number of visited nodes in the path between the move-determining attributes is limited to n/2, with n the number of available nodes. If no improving solution is found in any of the restricted neighborhoods, the best possible neighbor over all the neighborhoods is chosen as next solution. When a number of local optima have been reached, the intensification phase starts. It begins with updating the attribute matrix M. [M] i , j is the number of times that the edge (i, j) was part of the current local optimum. Next, the current solution is used as start solution for a full neighborhood VND without Tabu moves. After the intensification phase, the diversification phase starts. First, the Tabu lists are cleared. Then the attribute matrix is used to penalize frequently used attributes; by subtracting from the score, a given penalty times [M]i,j for each edge (i, j) in the intensification phase solution, we favor unused attributes. In order to find a new and diverse solution, we re-initialize the algorithm, including these penalties for 100 iterations. During this process, the Tabu lists are built up again to prevent a quick return towards the previous solutions. The path that is returned from the diversification phase is used as input for the main part of the Tabu search algorithm. After some diversification phases, the current solution may lie in an area of the solution space far away from the first solutions. By allowing that the penalties become bonuses, frequently used attributes will be favored. Hence intermediate solutions or new promising solutions will be used as current path. This enforces the search since it results in paths that are combinations of very promising solutions. Without this extra feature, the penalties prevent this, and very good solutions can be missed. The next component of the Tabu search is the use and the composition of the Tabu lists. Due to the use of different neighborhood structures, more than one Tabu list is required. In general, each move is added to exactly one list, but moves of more than one type, for remove-insert swap-adjacent move-down move-up swap 2-opt deletion insertion replacement or-opt example insertion and deletion, can belong to the same Tabu list. After each move only the corresponding Tabu list is updated, since otherwise after a deletion and a short re-optimizing, the insertion of that node might already be allowed. By forbidding this, repetition in the long run is prevented. The last aspect of the Tabu search algorithm for the TRPP to discuss is the stop criterion. A balance must be made between computation time and efficiency. The number of consecutive non-improving steps and a maximum computation time determine the stop criteria.

Dig deeper into Theoretical Foundations and Critical Analysis with our selection of articles.

- 24/7 Customer Support

- 100% Customer Satisfaction

- No Privacy Violation

- Quick Services

- Subject Experts